Danish math major

Comments

I don't understand how you are using incompleteness. For example, to me the sentence

"agents can make themselves immune to all possible money-pumps for completeness by acting in accordance with the following policy: ‘if I previously turned down some option X, I will not choose any option that I strictly disprefer to X.’"

sounds like "agents can avoid all money pumps for completeness by completing their preferences in a random way". That is true, but it doesn't seem like much of a challenge to completeness.
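Here is a minimal Python sketch of the quoted policy facing a single-souring money pump. It assumes toy preferences, and the options `A`, `A_minus`, `B` and the helper names are hypothetical. `A` is strictly preferred to `A_minus` (think "A minus a dollar"), and `B` is incomparable to both. A memoryless agent that accepts any trade it doesn't strictly disprefer ends up holding `A_minus`. An agent following the policy stops at `B`.

```python
# Hypothetical toy preferences: (x, y) in STRICT means x is strictly preferred to y.
# A_minus stands for "A minus a dollar"; B is incomparable to both A and A_minus.
STRICT = {("A", "A_minus")}


def strictly_prefers(x, y):
    """True if x is strictly preferred to y under the partial order STRICT."""
    return (x, y) in STRICT


def trade_sequence(start, offers, follow_policy):
    """Offer trades one at a time and return the option the agent ends up with.

    The agent accepts any offer it does not strictly disprefer to its current
    holding (the most exploitable reading of incomparability). With
    follow_policy=True it also obeys the quoted rule: never choose an option
    strictly dispreferred to an option it previously turned down.
    """
    holding = start
    turned_down = []
    for offer in offers:
        acceptable = not strictly_prefers(holding, offer)
        if follow_policy:
            acceptable = acceptable and not any(
                strictly_prefers(x, offer) for x in turned_down
            )
        if acceptable:
            turned_down.append(holding)  # trading away the old option turns it down
            holding = offer
        else:
            turned_down.append(offer)
    return holding


if __name__ == "__main__":
    offers = ["B", "A_minus"]
    print(trade_sequence("A", offers, follow_policy=False))  # A_minus: pumped
    print(trade_sequence("A", offers, follow_policy=True))   # B: not pumped
```

The policy agent's choices here (B over A, then B over A_minus) are exactly what the completion B > A > A_minus would produce.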

Can you explain what behavior is allowed under the first but isn't possible under my rephrasing?
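The question can be made concrete with a Python sketch that reuses the same hypothetical toy preferences. All names here are illustrative assumptions. The sketch enumerates every completion of the partial order into a strict total order. It then asks whether some completion rationalizes an observed list of choices, reading "chose c when r was available" as c ranked above r. A choice history with no rationalizing completion is behavior that my rephrasing cannot produce.

```python
from itertools import permutations

# Hypothetical toy setting: A strictly beats A_minus; B is incomparable to both.
OPTIONS = ["A", "A_minus", "B"]
STRICT = {("A", "A_minus")}


def completions(options, strict):
    """All strict total orders (best first) that extend the partial order `strict`."""
    result = []
    for order in permutations(options):
        rank = {x: i for i, x in enumerate(order)}
        if all(rank[x] < rank[y] for x, y in strict):
            result.append(order)
    return result


def rationalizing_completions(choices, options, strict):
    """Completions under which every (chosen, rejected) pair is ranked correctly."""
    good = []
    for order in completions(options, strict):
        rank = {x: i for i, x in enumerate(order)}
        if all(rank[c] < rank[r] for c, r in choices):
            good.append(order)
    return good


if __name__ == "__main__":
    # Policy-following agent: B over A, then B over A_minus.
    print(rationalizing_completions([("B", "A"), ("B", "A_minus")], OPTIONS, STRICT))
    # [('B', 'A', 'A_minus')]
    # Money-pumped agent: B over A, then A_minus over B.
    print(rationalizing_completions([("B", "A"), ("A_minus", "B")], OPTIONS, STRICT))
    # []
```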

Similarly, can we make explicit what behavior counts as two options being incomparable?

It seems to me that FDT has the property that you associate with the "ultimate decision theory".

My understanding is that FDT says you should follow the policy obtained by taking the argmax, over all policies, of the utility of following that policy (counting only the effects downstream of your policy).

In these easy examples, your policy space is just your space of committed actions, in which case the above seems to reduce to the "ultimate decision theory" criterion.
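Here is a toy Python sketch of "argmax over policies" in a case where policies are just committed actions. The example is my own assumption, not one from the post: standard Newcomb with a perfect predictor. The predictor puts $1,000,000 in the opaque box iff the policy one-boxes, and the transparent box holds $1,000. `payoff` and the policy labels are hypothetical.

```python
# Hypothetical Newcomb setup with a perfect predictor that reads the policy itself.
TRANSPARENT = 1_000
OPAQUE_PRIZE = 1_000_000
POLICIES = ["one_box", "two_box"]


def payoff(policy):
    """Utility of following `policy`, including the predictor's response to it."""
    opaque = OPAQUE_PRIZE if policy == "one_box" else 0
    return opaque if policy == "one_box" else opaque + TRANSPARENT


best_policy = max(POLICIES, key=payoff)
print(best_policy, payoff(best_policy))  # one_box 1000000
```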

The assumptions made here are not time-reversible: the macrostate at time t+1 being deterministic given the macrostate at time t does not imply that the macrostate at time t is deterministic given the macrostate at time t+1.

So in this article the direction of time is given by the asymmetry in the evolution of macrostates.
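Here is a small Python illustration of the asymmetry, using a hypothetical three-macrostate dynamics. The forward map is a function, so each macrostate has exactly one successor. It is not injective, though, so a macrostate can have several predecessors, and the present does not determine the past.

```python
# Hypothetical macrostate dynamics: each macrostate has exactly one successor.
STEP = {"low": "mid", "mid": "high", "high": "high"}


def predecessors(state):
    """All macrostates at time t that lead to `state` at time t+1."""
    return sorted(s for s, nxt in STEP.items() if nxt == state)


def backward_deterministic(step):
    """True iff no macrostate has more than one predecessor (the map is injective)."""
    successors = list(step.values())
    return len(successors) == len(set(successors))


print(predecessors("high"))          # ['high', 'mid']
print(backward_deterministic(STEP))  # False
```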

I think "book of X" can be usefully "translated" as beliefs about X.

The book of truth is not truth, just like the book of night is not night.

I think "book of names" can be read as the human categorisation of animals (giving them names), although other readings seem plausible too.

You might be interested in John Harsanyi on the topic.

He argues that the conclusion achieved in the original position is (average) utilitarianism.

I agree that behind the veil one shouldn't know the time (and thus can't care differently about current vs. future humans). This actually causes further problems for Rawls's conception when you project back in time: what if the worst life that will ever be lived has already been lived? Then the maximin principle gives no guidance at all, and under uncertainty it recommends putting all effort into preventing a new minimum from being set.
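Here is a toy Python illustration of the maximin problem, using entirely made-up welfare numbers. Suppose the worst past life has welfare 3. Every policy whose future lives all stay above 3 then gets the same maximin value. Only a policy that sets a new minimum is distinguished from the others.

```python
# Made-up welfare levels; the worst life so far has welfare 3.
PAST_LIVES = [3, 7, 5]

# Hypothetical policies, each given by the welfare of the future lives it produces.
POLICIES = {
    "modest": [4, 4, 4],
    "generous": [6, 8, 9],
    "utopian": [10, 10, 10],
    "risky": [2, 20, 20],
}


def maximin_value(future_lives):
    """Welfare of the worst life ever lived, past and future, under a policy."""
    return min(PAST_LIVES + future_lives)


values = {name: maximin_value(lives) for name, lives in POLICIES.items()}
print(values)  # {'modest': 3, 'generous': 3, 'utopian': 3, 'risky': 2}
```

Maximin cannot rank "modest", "generous" and "utopian" against each other. It only penalizes "risky", for setting a new minimum.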

The concept of a Kolmogorov sufficient statistic might be the missing piece (cf. Cover and Thomas, Elements of Information Theory, Section 14.12).

We want the shortest program that describes a sequence of bits. A particularly interpretable type of such program is: "the sequence is in the set X generated by program p, and among those it is the n'th element".

For example: "the sequence is in the set of sequences of length 1000 with 104 ones, generated by (insert program here), of which it is the n~10^144'th element".
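Here is a quick Python check of the index size in that example. It counts only the bits for the index n and ignores the (small) cost of the program p and of specifying 104. The variable names are illustrative.

```python
import math

N, K = 1000, 104  # sequence length and number of ones, from the example

# Number of length-N sequences with exactly K ones: the size of the set X.
set_size = math.comb(N, K)

# Bits needed to hard-code the index n of our sequence within X.
index_bits = math.log2(set_size)

print(f"|X| ~ 10^{math.log10(set_size):.1f}")
print(f"index: {index_bits:.0f} bits vs {N} bits for the raw sequence")
```

So the two-part description uses roughly half the bits of writing out the sequence directly.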

We therefore define f(String, n) to be the size of the smallest set containing String that is generated by a program of length at most n (or, alternatively, whose membership can be tested by a program of length at most n).

If you plot log2 f(String, n) against n, you will often see stretches where the line has slope -1, corresponding to each extra bit of program length being used to hard-code one more bit of the index. In such a stretch the longer programs aren't describing any more structure than the program at which the slope first became -1. We call that program a Kolmogorov minimal sufficient statistic.

The relevance is that for a sequence of independent flips of a biased coin, the Kolmogorov minimal sufficient statistic is the bias (essentially, the number of ones). And it is often more natural to think about the minimal sufficient statistic than about the shortest program itself.
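The true structure function is uncomputable, so the following is only a Python toy under stated assumptions. It uses two hypothetical models for the example sequence. The first is "all length-1000 sequences", with an assumed 20-bit program. The second is "length-1000 sequences with 104 ones", which costs about 10 extra bits to specify the count. The idealized curve drops sharply once the count can be specified and falls with slope -1 afterwards. In this toy, the model that encodes the count (i.e. the bias) therefore plays the role of the minimal sufficient statistic.

```python
import math

# Hypothetical program sizes in bits (illustrative, not real Kolmogorov complexities).
N, K = 1000, 104                          # sequence length and number of ones
BASE = 20                                 # program for "all length-N sequences"
COUNT_BITS = math.ceil(math.log2(N + 1))  # extra bits to also specify K


def log_f(k):
    """Idealized log2 of the smallest set describable with a program of at most k bits.

    Each model spends its leftover bits hard-coding bits of the index, which
    halves the set per bit until only a single sequence remains.
    """
    candidates = []
    if k >= BASE:  # model 1: all sequences of length N
        candidates.append(max(N - (k - BASE), 0))
    if k >= BASE + COUNT_BITS:  # model 2: sequences of length N with exactly K ones
        index_bits = math.log2(math.comb(N, K))
        candidates.append(max(index_bits - (k - BASE - COUNT_BITS), 0.0))
    return min(candidates) if candidates else math.inf


for k in [BASE, BASE + COUNT_BITS - 1, BASE + COUNT_BITS, BASE + COUNT_BITS + 1]:
    print(k, round(log_f(k), 2))
```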

Then you violate the accurate-beliefs condition. (If the world is in fact a random mixture, in proportions that their beliefs track correctly, then FDT will do better when averaging over the mixture.)

I don't think the quoted problem has that structure.

"And suppose that the existence of S tends to cause both (i) one-boxing tendencies and (ii) whether there’s money in the opaque box or not when decision-makers face Newcomb problems."

"But now suppose that the pathway by which S causes there to be money in the opaque box or not is that another agent looks at S"

So S causes one-boxing tendencies, and the person putting money in the box looks only at S.

So it seems to change the problem to say that the predictor observes your brain or your decision procedure, when all they observe is S, which, while causing "one-boxing tendencies", is not causally downstream of your decision theory.

Further, if S were downstream of your decision procedure, then FDT one-boxes whether or not the path from the decision procedure to the contents of the boxes routes through an agent. This undermines the criticism that FDT has implausible discontinuities.

Can't you make the same argument you make with Schwarz's Procreation example by using Parfit's Hitchhiker after you have reached the city? In that case I think it's better to use that example, as it avoids Heighn's criticism.

In the case of implausible discontinuities, I agree with Heighn that there is no subjunctive dependence.

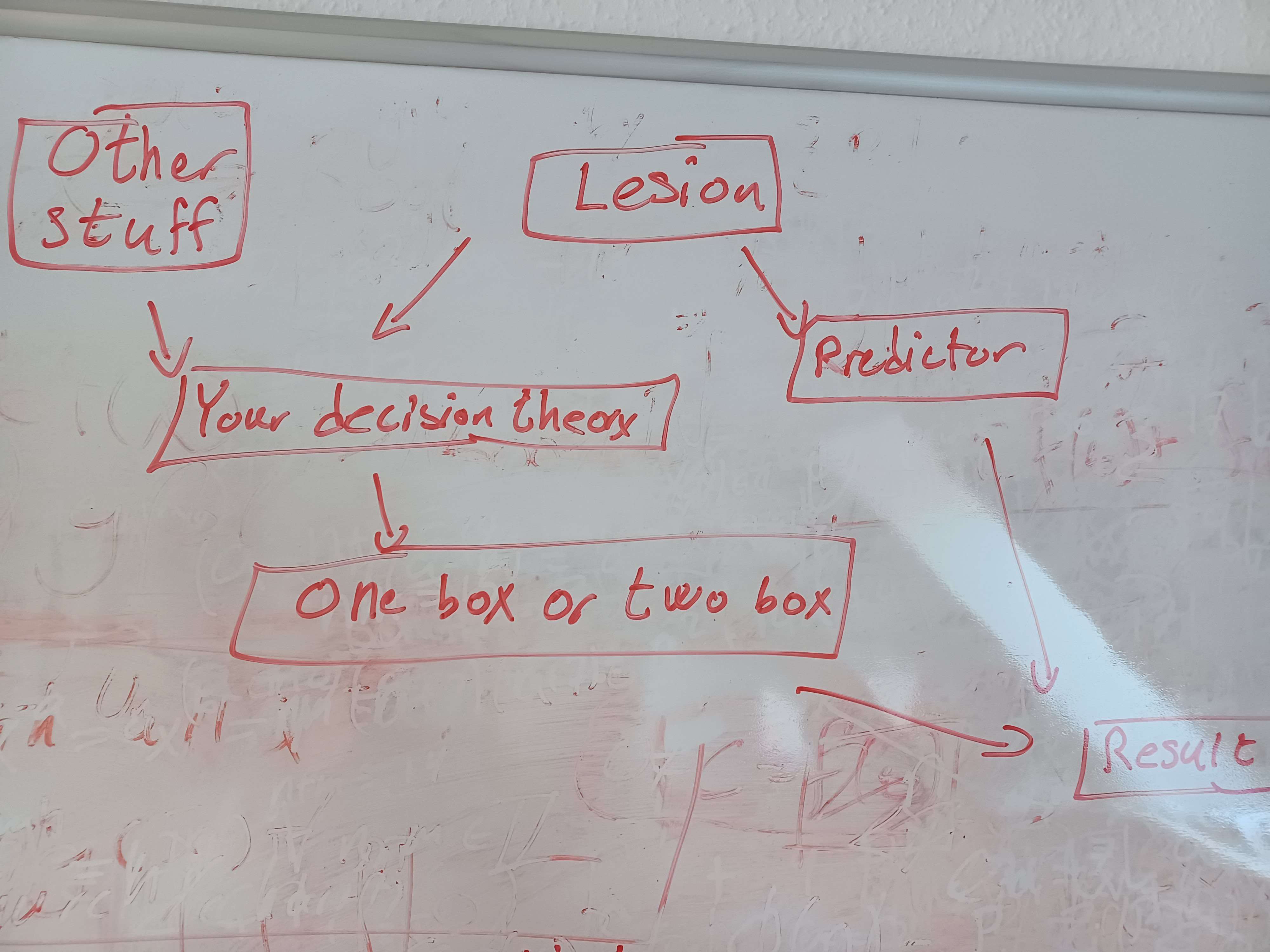

Here is a quick diagram of the causation in the thought experiment, as I understand it.

We have an outcome which is completely determined by your decision to one-box or two-box and the predictor's decision of whether to put money in the opaque box.

The Predictor decides based on the presence of a lesion (or some other physical fact).

Your decision how many boxes to take is determined by your decision theory.

And your decision theory is partly determined by the Lesion and partly by other stuff.

Now, (my understanding of) the claim is that there is no directed path from your decision theory to the predictor. This means that applying the do-operator to the decision theory node doesn't change the distribution of the predictor's choices.
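Here is a minimal Python check of that claim on a toy version of the diagram. The probabilities are assumed, and so is the rule by which the lesion and "other stuff" determine the decision theory. Conditioning on the decision theory changes the probability that the box is filled, because both share the lesion as a cause. Intervening on the decision theory leaves that probability unchanged.

```python
from fractions import Fraction
from itertools import product

P_LESION = Fraction(1, 2)  # hypothetical prior on the lesion
P_OTHER = Fraction(1, 2)   # hypothetical prior on the "other stuff"


def predictor_fills(lesion):
    """The predictor looks only at the lesion, never at the decision theory."""
    return lesion == 1


def decision_theory(lesion, other):
    """Hypothetical mechanism: one-boxing needs both the lesion and the other factor."""
    return "one_box" if lesion and other else "two_box"


def prob_filled(dt_value, intervene):
    """P(filled | DT = dt_value), or P(filled | do(DT = dt_value)) if intervene."""
    num = den = Fraction(0)
    for lesion, other in product([0, 1], repeat=2):
        weight = (P_LESION if lesion else 1 - P_LESION) * (
            P_OTHER if other else 1 - P_OTHER
        )
        # Under do(), the DT node is set by hand; its usual causes are cut off.
        dt = dt_value if intervene else decision_theory(lesion, other)
        if dt != dt_value:
            continue
        den += weight
        if predictor_fills(lesion):
            num += weight
    return num / den


for dt in ["one_box", "two_box"]:
    print(dt, prob_filled(dt, intervene=False), prob_filled(dt, intervene=True))
# one_box 1 1/2
# two_box 1/3 1/2
```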

The argument in the Aumann paper for dropping the completeness axiom is that doing so makes for a better theory of how humans, businesses and other actually existing agents reason, not that it makes for a better theory of ideal reasoning.

The paper seems to prove that any partial preference ordering which obeys the other axioms must be representable by a utility function, but that there will be multiple such representatives.

My claim is that either there will be a Dutch book, or your actions will be equivalent to the actions you would have taken by following one of those representative utility functions. In the latter case, even though the internals don't look like following a utility function, for the purposes of VNM they are.

But demonstrating this is hard, as it is unclear what actions correspond to the fact that A is incomparable to B.

The concrete examples of non-complete agents above either seem like they will act according to one of those representatives, or seem easily Dutch-bookable.